Blog Credit: Trupti Thakur

Image Courtesy: Google

NextGen AI Assistants

Around Google I/O 2024, the industry got a look at major advances in artificial intelligence, especially in how people and computers communicate with each other. Google and OpenAI, the company behind ChatGPT, unveiled new AI assistants: Project Astra and GPT-4o, respectively. Both are pitched as more flexible and useful than earlier assistants.

What is Project Astra?

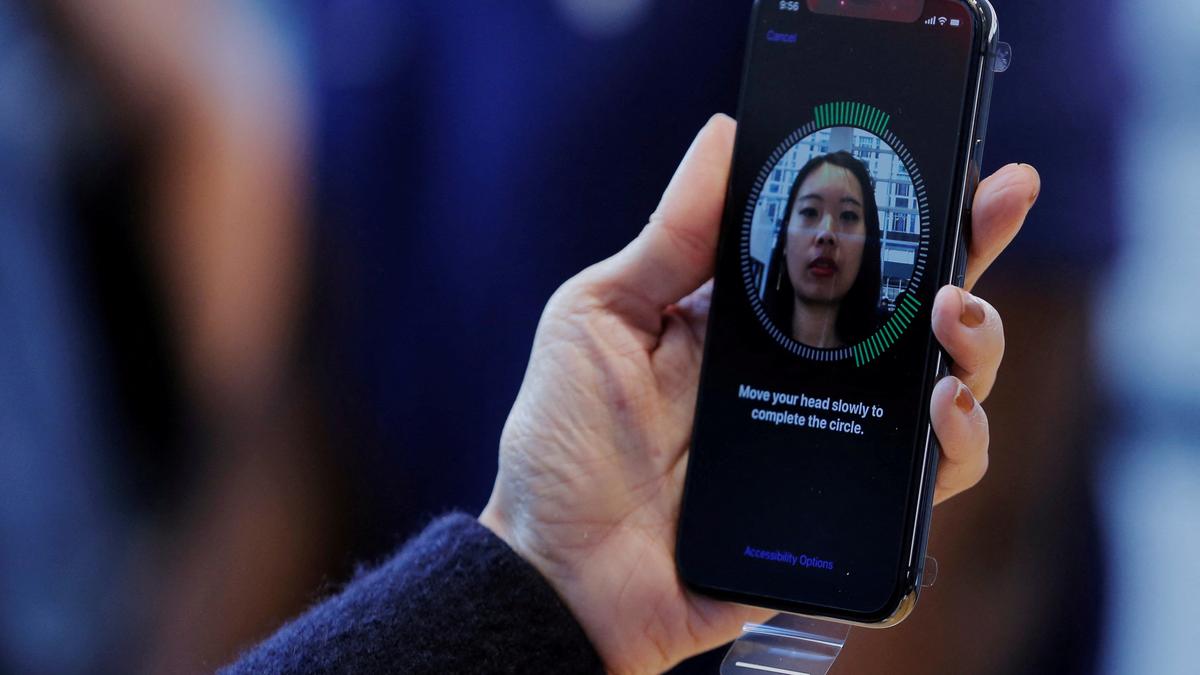

Google’s Project Astra aims to change how people interact with AI by bringing multimodal capabilities to smartphones and smart glasses. Users are meant to be able to interact with the assistant through speech, text, and visual input (photos and video). By using the device camera to capture the user’s surroundings in real time, the AI can interpret what it sees and draw on information from the internet, functioning essentially as a personal assistant. The aim is an assistant as smart and helpful as the AI helper in the hit film Avengers: Infinity War.

The Innovation Behind Google’s Gemini

Project Astra is built on Google’s Gemini, a multimodal foundation model that can process and understand different types of input at the same time. During the Google I/O presentation, Google demonstrated the technology running on a Google Pixel phone and on prototype smart glasses. These devices could interpret continuous streams of audio and video, letting users ask about their surroundings and hold real-time conversations with the assistant.

OpenAI’s Approach with GPT-4o

The day before Google’s keynote, OpenAI announced GPT-4o, where the “o” stands for “omni.” The model works across text, audio, and images and can handle many tasks, such as translating languages, solving math problems, and fixing bugs in code. GPT-4o was first demonstrated on smartphones, and it reportedly offers capabilities similar to those Google showed for Project Astra.

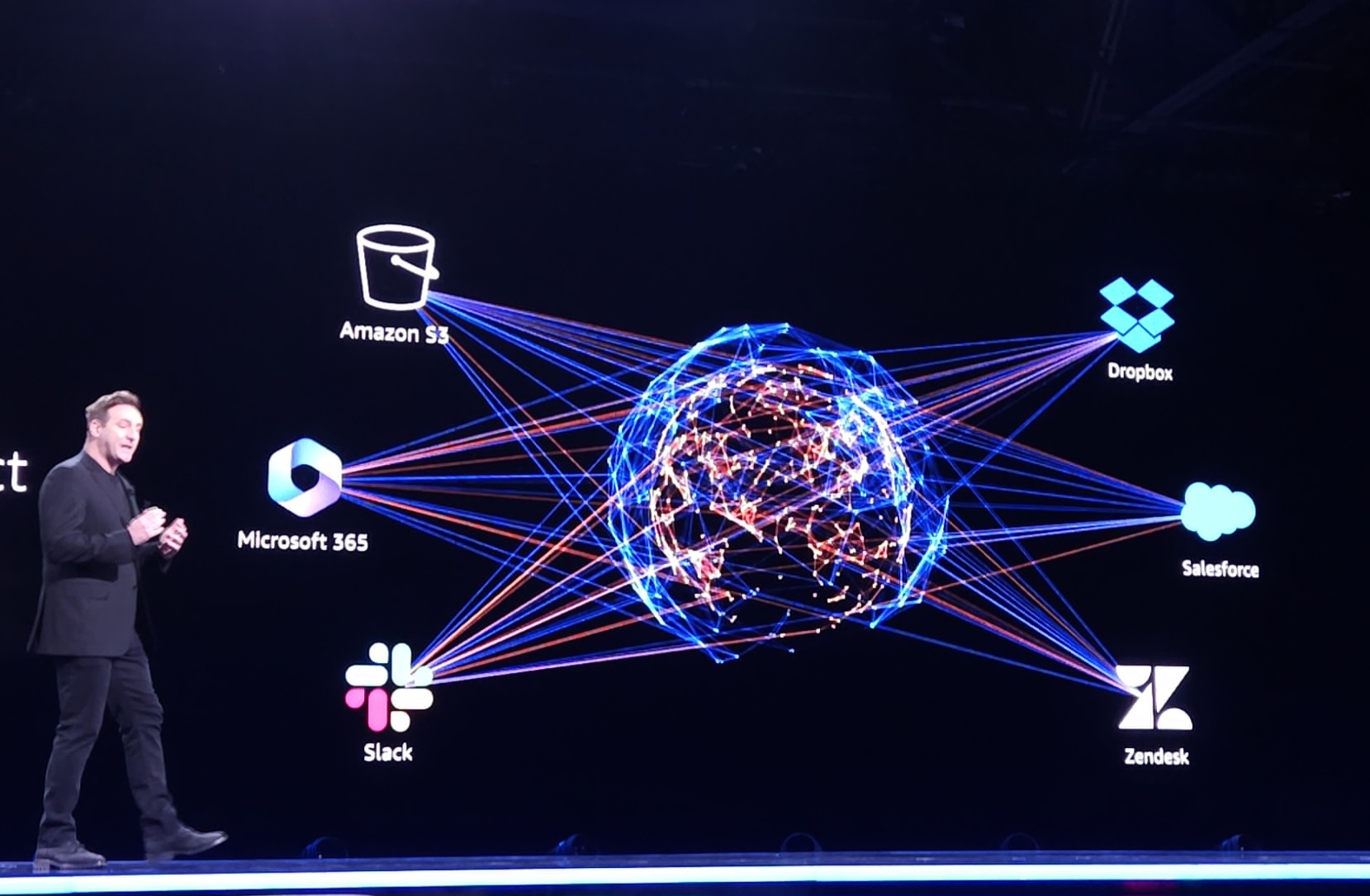

More About Multimodal AI Language Models

Many AI language models, such as OpenAI’s GPT-4 and Google’s Gemini, combine text with other types of data, like images and audio, to make interpretation and generation more useful. Most are built on transformer architectures, which turn each type of input into a sequence of embeddings so the model can process different kinds of data at the same time. This makes complex tasks more tractable, such as answering questions about images (visual question answering) or analyzing sentiment in speech. These models also improve accessibility technology, for example by generating audio descriptions for people who are blind or have low vision. Multimodal systems need a lot of computing power and huge amounts of training data, which makes advanced GPUs and large-scale storage even more important. Handling noisy or inconsistent data and protecting privacy when combining data sources are further areas where innovation is needed.
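The idea of a transformer handling several data types at once can be sketched in a few lines. This is a minimal illustration of "early fusion", assuming tiny hand-written embeddings: text-token vectors and image-patch vectors are placed in one sequence, and a single self-attention step lets every position weigh every other position, across modalities. The embedding values, the dimension of 4, and the helper names `fuse` and `self_attention` are hypothetical; this is not how GPT-4o or Gemini are actually implemented.

```python
import math
from typing import List, Tuple

Vector = List[float]

# Hypothetical toy embeddings of dimension 4. A real model would produce these
# with a learned text-embedding table and an image-patch encoder.
text_tokens = {
    "what": [0.1, 0.0, 0.2, 0.0],
    "color": [0.0, 0.9, 0.1, 0.3],
    "is": [0.1, 0.1, 0.0, 0.0],
    "this": [0.2, 0.0, 0.1, 0.1],
}
image_patches = [
    [0.0, 0.8, 0.2, 0.4],  # hypothetical patch showing a brightly colored object
    [0.3, 0.1, 0.0, 0.0],  # hypothetical background patch
]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(text_vecs: List[Vector], image_vecs: List[Vector]) -> Tuple[List[Vector], List[str]]:
    """Early fusion: put both modalities in one sequence, recording each position's modality."""
    seq = list(text_vecs) + list(image_vecs)
    modality = ["text"] * len(text_vecs) + ["image"] * len(image_vecs)
    return seq, modality


def self_attention(seq: List[Vector]) -> Tuple[List[Vector], List[List[float]]]:
    """Single-head scaled dot-product self-attention.

    Simplification (assumption): queries, keys and values are the input vectors
    themselves; real transformers learn separate projection matrices.
    """
    d = len(seq[0])
    outputs: List[Vector] = []
    weights_all: List[List[float]] = []
    for q in seq:
        # Every position, text or image, scores every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in seq]
        weights = softmax(scores)
        weights_all.append(weights)
        # Output is the attention-weighted average of all vectors in the sequence.
        outputs.append([sum(w * v[j] for w, v in zip(weights, seq)) for j in range(d)])
    return outputs, weights_all


if __name__ == "__main__":
    seq, modality = fuse(list(text_tokens.values()), image_patches)
    outputs, weights = self_attention(seq)
    color_idx = list(text_tokens).index("color")
    # Show how strongly the "color" text token attends to each position.
    for m, w in zip(modality, weights[color_idx]):
        print(f"{m:5s} {w:.3f}")
```

In this toy setup the "color" token puts more weight on the first image patch than on the background patch, because their hypothetical vectors are more similar. Real multimodal models add learned projections, many attention heads and layers, and positional and modality embeddings; the identity projections here only keep the sketch short.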



May 24, 2024