Blog Credit: Trupti Thakur

Image Courtesy: Google

Nvidia’s Rubin

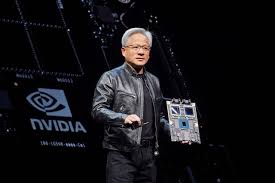

Nvidia Corp., a leading maker of AI chips, has announced a new AI chip platform called Rubin, which is expected in 2026. CEO Jensen Huang made the announcement during a keynote at National Taiwan University in Taipei, ahead of the Computex trade show.

The Rubin Platform

A new set of CPUs, GPUs, and networking processors will make up the Rubin platform, with the goal of making AI much more powerful. An important addition is the Vera CPU, which is designed to improve AI applications. The platform will also have next-generation GPUs that use high-bandwidth memory from major suppliers such as Samsung, Micron, and SK Hynix.

Strategic Release Schedule

Nvidia plans to release a new family of AI chips every year instead of every two years, a significant change from its previous cadence. The shift shows that Nvidia is committed to keeping pace with rapid progress in AI technology and staying ahead of the competition.

Market Dominance and Strategic Intentions

Nvidia currently holds about 80% of the market for AI chips. The Rubin platform and the new yearly release schedule are both part of Nvidia’s plan to strengthen its market position and keep driving innovation in the AI field.

Impact on the AI Industry

Through the Rubin platform, Nvidia wants to meet the growing need for powerful AI solutions in many areas, such as data centers and self-driving cars. Adding high-bandwidth memory to these AI chips is expected to make them much faster. The tech industry is looking forward to big improvements in 2026, driven by Nvidia’s continued innovation and the new yearly release schedule.

To sum up, Nvidia’s Rubin platform is a big step forward in AI technology. It also positions the company to reshape the AI industry through better features and a focused market strategy.

Huang also detailed plans for an annual tick-tock-style upgrade cycle of its AI acceleration platforms, mentioning an upcoming Blackwell Ultra chip slated for 2025 and a subsequent platform called “Rubin” set for 2026.


Nvidia’s data center GPUs currently power a large majority of cloud-based AI models, such as ChatGPT, in both the development (training) and deployment (inference) phases. Investors are watching the company closely and expect that run to continue.

During the keynote, Huang seemed somewhat hesitant to make the Rubin announcement, perhaps wary of invoking the so-called Osborne effect, whereby a company’s premature announcement of the next iteration of a tech product eats into the current iteration’s sales. “This is the very first time that this next click has been made,” Huang said, holding up his presentation remote just before the Rubin announcement. “And I’m not sure yet whether I’m going to regret this or not.”

Nvidia Keynote at Computex 2024.

The Rubin AI platform, expected in 2026, will use HBM4 (a new form of high-bandwidth memory) and NVLink 6 Switch, operating at 3,600 GB/s. Following that launch, Nvidia will release a tick-tock iteration called “Rubin Ultra.” While Huang did not provide extensive specifications for the upcoming products, he promised cost and energy savings related to the new chipsets.

During the keynote, Huang also introduced a new ARM-based CPU called “Vera,” which will be featured on a new accelerator board called “Vera Rubin,” alongside one of the Rubin GPUs.

Much like Nvidia’s Grace Hopper architecture, which combines a “Grace” CPU and a “Hopper” GPU to pay tribute to the pioneering computer scientist of the same name, Vera Rubin refers to Vera Florence Cooper Rubin (1928–2016), an American astronomer who made discoveries in the field of deep space astronomy. She is best known for her pioneering work on galaxy rotation rates, which provided strong evidence for the existence of dark matter.

Nvidia’s reveal of Rubin is not a surprise in the sense that most big tech companies are continuously working on follow-up products well in advance of release, but it’s notable because it comes just three months after the company revealed Blackwell, which is barely out of the gate and not yet widely shipping.

At the moment, the company seems to be comfortable leapfrogging itself with new announcements and catching up later; Nvidia just announced that its GH200 Grace Hopper “Superchip,” unveiled one year ago at Computex 2023, is now in full production.

With Nvidia stock rising and the company possessing an estimated 70–95 percent of the data center GPU market share, the Rubin reveal is a calculated risk that seems to come from a place of confidence. That confidence could turn out to be misplaced if a so-called “AI bubble” pops or if Nvidia misjudges the capabilities of its competitors. The announcement may also stem from pressure to continue Nvidia’s astronomical growth in market cap with nonstop promises of improving technology.

Accordingly, Huang has been eager to showcase the company’s plans to continue pushing silicon fabrication tech to its limits and widely broadcast that Nvidia plans to keep releasing new AI chips at a steady cadence.

“Our company has a one-year rhythm. Our basic philosophy is very simple: build the entire data center scale, disaggregate and sell to you parts on a one-year rhythm, and we push everything to technology limits,” Huang said during Sunday’s Computex keynote.

Despite Nvidia’s recent market performance, the company’s run may not continue indefinitely. With ample money pouring into the data center AI space, Nvidia isn’t alone in developing accelerator chips. Competitors like AMD (with the Instinct series) and Intel (with Gaudi 3) also want to win a slice of the data center AI-accelerator market away from Nvidia. And OpenAI’s Sam Altman is trying to encourage diversified production of GPU hardware that will power the company’s next generation of AI models in the years ahead.

Blog By: Trupti Thakur

Jun 05, 2024
