Blog By: Priyanka Rana

Detect Duplicate Websites

In cybersecurity, where attackers keep changing their tactics, detecting duplicate websites is a critical defense against a wide range of threats. This blog explains why duplicate website detection matters, describes the risks posed by cloned sites, and explores strategies for strengthening cyber defenses against them.

The Pervasive Threat of Duplicate Websites:

Duplicate or cloned websites are a breeding ground for malicious activities. Cybercriminals use these replicas to launch phishing attacks, distribute malware, and deceive unsuspecting users. Identifying and mitigating these duplicate sites is imperative to prevent data breaches, financial losses, and the erosion of trust in online platforms.

The Domino Effect of Website Duplication:

As with duplicate files, replicating a website sets off a domino effect of security risks. A single clone can be used to steal sensitive information, act as a gateway for further attacks, and tarnish the reputation of the legitimate entity it imitates, which is why robust duplicate detection mechanisms are needed.

Key Risks Associated with Duplicate Websites:

- Phishing Attacks: Cybercriminals often duplicate legitimate websites to conduct phishing attacks, tricking users into divulging sensitive information such as login credentials, financial details, or personal data.

- Malware Distribution: Duplicate websites can be used as conduits for malware distribution. Users accessing these sites may inadvertently download malicious software, compromising the security of their devices.

- Brand Impersonation: Cloned websites are frequently employed in brand impersonation schemes. This can damage the reputation of legitimate businesses and lead to financial losses as users are deceived into engaging with fraudulent entities.

- SEO Manipulation: Duplicate content across websites can impact search engine rankings and result in unintended consequences such as reduced visibility for legitimate sites or even blacklisting by search engines.

Strategies for Effective Duplicate Website Detection:

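- Content Hashing: Cryptographic hash functions such as SHA-256 produce a fixed-length fingerprint of a page's content, so identical pages (or entire sites) can be matched quickly and reliably by comparing digests. Because changing even one character produces a completely different hash, this only catches exact copies; near-duplicates, such as a clone with an altered login form or logo, require similarity-preserving techniques such as shingling with Jaccard similarity, MinHash, or SimHash.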

- Web Crawling and Comparison: Regularly crawling the web and comparing website structures, HTML code, and metadata helps identify similarities and anomalies indicative of duplicate sites.

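- Machine Learning Algorithms: Machine learning models trained on examples of legitimate and cloned sites, using features such as page text, HTML structure, URLs, and visual appearance, can recognize patterns associated with duplicate websites, enabling automated detection of and response to emerging threats.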

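- Digital Certificates and SSL/TLS: Monitoring digital certificates can aid in identifying duplicate websites. A missing or misconfigured certificate is a warning sign, but many phishing sites now obtain free, valid certificates, so HTTPS alone proves little. Watching Certificate Transparency logs for newly issued certificates on lookalike domains can reveal clones, sometimes before they go live.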

- User Education: Empowering users to recognize and report suspicious websites enhances the collective defense against duplicates. Educating users about secure online practices and the red flags associated with phishing can be a powerful preventive measure.
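The content-hashing strategy can be sketched in Python. This is a minimal illustration rather than a production detector: it assumes the page text has already been extracted, and the helper names (`normalize`, `exact_fingerprint`, `shingles`, `jaccard`), the 3-word shingle size, and the sample strings are hypothetical choices for this example. A SHA-256 digest catches exact copies, while the Jaccard similarity of word shingles catches near-copies that differ by only a few words.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting changes don't alter the result."""
    return re.sub(r"\s+", " ", text).strip().lower()


def exact_fingerprint(text: str) -> str:
    """SHA-256 hex digest of the normalized text; identical content gives an identical digest."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def shingles(text: str, k: int = 3) -> set:
    """Set of overlapping k-word sequences from the normalized text (k=3 is an illustrative choice)."""
    words = normalize(text).split()
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared shingles divided by all distinct shingles: 1.0 means identical sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Hypothetical sample: the clone changes a single character of the original.
original = "Sign in to your account. Enter your email and password to continue."
clone = "Sign in to your account. Enter your email and password to continue!"

print(exact_fingerprint(original) == exact_fingerprint(clone))  # False: any change breaks an exact match
print(round(jaccard(shingles(original), shingles(clone)), 2))   # 0.82: still clearly a near-duplicate
```

At scale, comparing every pair of shingle sets becomes expensive; MinHash approximates Jaccard similarity with short signatures so that candidate pairs can be found quickly.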
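For the crawling-and-comparison strategy, one simple structural check is to compare the sequence of HTML tags on two pages, because a cloned page often copies the original's markup even when its text or links are changed. The sketch below uses only Python's standard library (`html.parser.HTMLParser` and `difflib.SequenceMatcher`). The class and function names, the sample pages, and the use of the `<title>` text as the metadata signal are illustrative assumptions, and the crawling itself (fetching pages) is left out.

```python
from difflib import SequenceMatcher
from html.parser import HTMLParser


class PageStructure(HTMLParser):
    """Records the order of start tags and the <title> text of one HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.tags: list = []
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)  # HTMLParser passes tag names lowercased
        self._in_title = tag == "title"

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def parse(html: str) -> PageStructure:
    page = PageStructure()
    page.feed(html)
    page.close()
    return page


def structural_similarity(html_a: str, html_b: str) -> float:
    """Similarity in [0, 1] between the two pages' tag sequences."""
    return SequenceMatcher(None, parse(html_a).tags, parse(html_b).tags).ratio()


# Hypothetical pages: the clone wraps the login form in an extra <div>.
legit = ("<html><head><title>Example Bank</title></head><body>"
         "<form><input><input><button>Log in</button></form></body></html>")
clone = ("<html><head><title>Example Bank</title></head><body><div>"
         "<form><input><input><button>Log in</button></form></div></body></html>")

print(round(structural_similarity(legit, clone), 2))  # 0.94
print(parse(legit).title == parse(clone).title)       # True: same title metadata
```

Comparing tag sequences ignores text and attribute values, so it still matches a clone whose form has been pointed at a different server; in practice it would be combined with content and metadata checks.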
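Certificate monitoring often comes down to scanning newly observed hostnames, for example names taken from Certificate Transparency logs, for lookalikes of a protected domain. This sketch assumes you already have such a list of hostnames (collecting it is out of scope). The helper names, the edit-distance threshold of 2, and the crude "second-to-last label" rule (which mishandles suffixes such as `.co.uk`) are illustrative assumptions. It flags names whose main label is within a small Levenshtein distance of the brand's, or that embed the brand name inside someone else's domain.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def main_label(hostname: str) -> str:
    """Crude guess at the registered name: the second-to-last label ('examp1e' in 'login.examp1e.com')."""
    parts = hostname.split(".")
    return parts[-2] if len(parts) >= 2 else parts[0]


def find_lookalikes(brand_domain: str, hostnames: list, max_distance: int = 2) -> list:
    brand_domain = brand_domain.lower().rstrip(".")
    brand_label = main_label(brand_domain)
    flagged = []
    for name in hostnames:
        name = name.lower().rstrip(".").removeprefix("*.")  # normalize wildcard certificate names
        # The brand's own domain and its subdomains are legitimate.
        if name == brand_domain or name.endswith("." + brand_domain):
            continue
        if levenshtein(main_label(name), brand_label) <= max_distance or brand_label in name:
            flagged.append(name)
    return flagged


# Hypothetical hostnames, e.g. collected from newly issued certificates.
observed = ["www.example.com", "examp1e.com", "login.example.com.secure-verify.net",
            "exarnple.com", "weather.org"]
print(find_lookalikes("example.com", observed))
# ['examp1e.com', 'login.example.com.secure-verify.net', 'exarnple.com']
```

A distance threshold of 2 on short names produces many false positives, so flagged names are best treated as leads for review rather than verdicts.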

Conclusion:

Duplicate website detection is a key part of cybersecurity, guarding against phishing, malware distribution, and brand impersonation. By combining content fingerprinting, regular crawling and comparison, machine learning, certificate monitoring, and user education, organizations can safeguard their online presence and protect their users. As cloning techniques continue to evolve, prioritizing duplicate website detection is essential for a secure and trustworthy online environment.

Jan 16, 2024

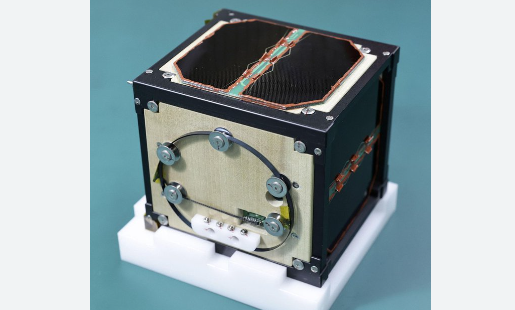

The ‘LignoSat’Nov 12, 2024

India’s First Writer’s VillageOct 28, 2024

The First Tri-Fold Of SamsungOct 24, 2024

India’s New Spam Tracking SystemOct 23, 2024

ICC T20 Women’s World Cup Winner 2024Oct 22, 2024